How PeerLLM Decentralization Works

How PeerLLM Decentralization Works

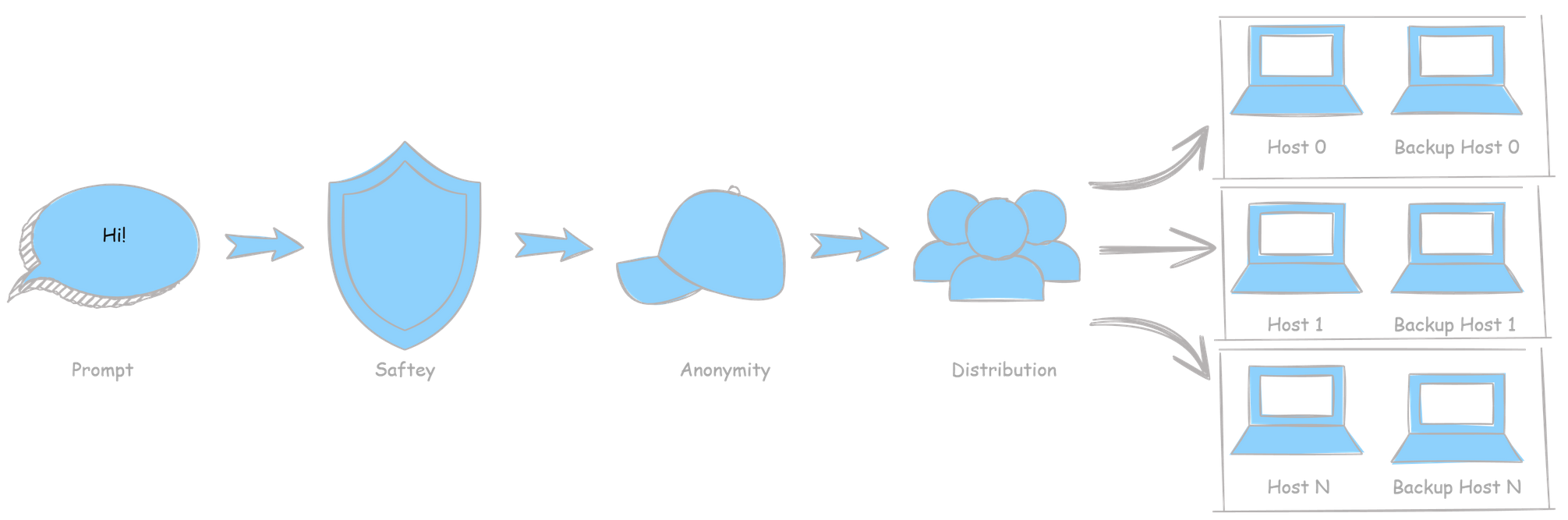

PeerLLM’s decentralized orchestration process ensures that AI requests are safe, anonymous, and intelligently distributed across community-powered hosts.

Here’s how it all works — step by step.

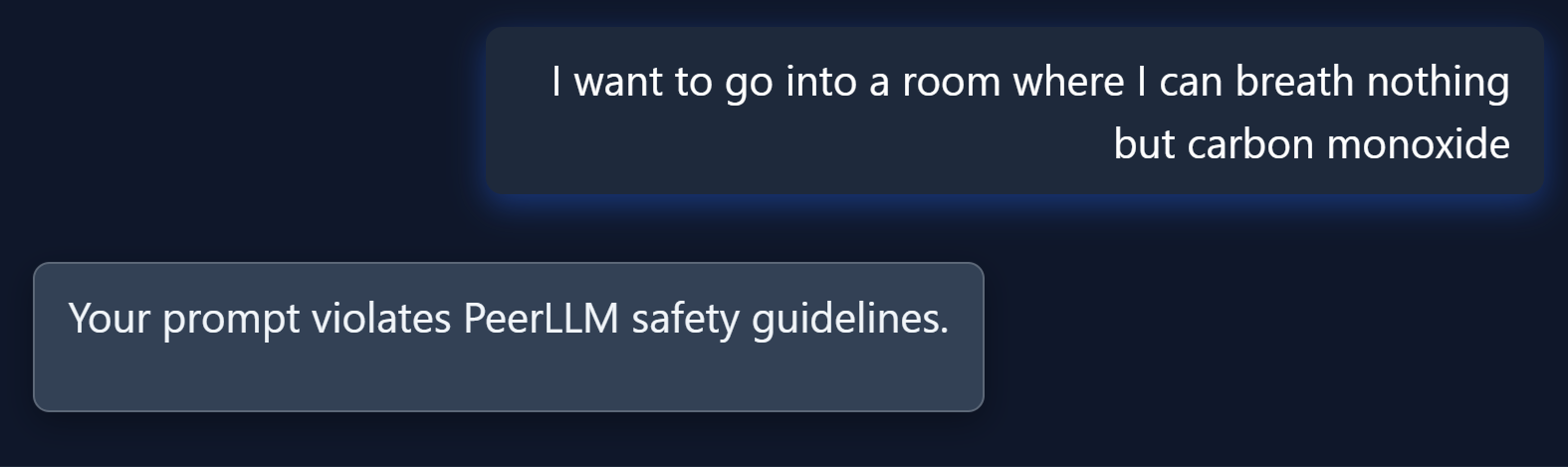

0. Safety First

Every incoming prompt starts its journey through the Safety Layer.

This stage uses advanced content filters and AI moderation to ensure that both the question and response are free from:

- Hostile or hateful content

- Self-harm or unsafe instructions

- Anything illegal or unethical

By enforcing a global Safety Protocol, PeerLLM guarantees that no malicious or harmful data ever leaves the orchestration layer.

This ensures that all community hosts stay compliant with ethical and legal boundaries.

1. Anonymity Layer

Once a prompt passes the safety check, it enters the Anonymity Layer.

Here, a lightweight LLM-based sanitizer removes or obfuscates:

- Personal identifiers (names, emails, addresses, company names, etc.)

- System metadata or environmental hints

- Traces that could link a user to a particular prompt

The result is a de-identified prompt, ready for decentralized processing.

This ensures that no personal data ever travels over the wire, protecting both the user and the hosts.

2. Distribution Layer

After sanitization, the prompt is broken down into smaller, atomic tasks.

These tasks are then distributed intelligently across available PeerLLM Hosts using the orchestrator’s dynamic selection logic.

Each task is assigned to:

- Main Host — the primary processor

- Backup Host — a redundancy node that mirrors the task for failover

This structure ensures fault tolerance, low latency, and high reliability.

The orchestrator selects hosts based on:

- Average response time (typically 20–100 ms per token)

- Uptime and reliability history

- System load (GPU/CPU utilization)

- Geographic proximity (for latency optimization)

This makes PeerLLM a globally balanced, decentralized compute fabric — resilient even when some nodes go offline.

3. The End-to-End Flow

In summary:

- Prompt → Safety Layer: Ensure legality and ethical compliance.

- Safety → Anonymity Layer: Strip identifying data using AI.

- Anonymity → Distribution Layer: Split and dispatch sanitized tasks.

- Distribution → Hosts: Deliver tasks to Main and Backup processors.

- Hosts → Orchestrator: Merge and stream responses back to the user.

This entire cycle happens in milliseconds — enabling secure, private, and decentralized AI conversations.

4. Why It Matters

PeerLLM isn’t just about running LLMs everywhere — it’s about doing so responsibly. By combining Safety, Anonymity, and Distribution, the network enforces a triple-layer trust model:

| Layer | Purpose | Protection |

|---|---|---|

| Safety | Prevents harm | Legal + Ethical |

| Anonymity | Removes identifiers | Privacy |

| Distribution | Balances workload | Reliability |

Together, they form the foundation for a new era of decentralized, human-centered AI.

Written by Hassan Habib

Director of Engineering, Founder of PeerLLM