Announcing PeerLLM Host v0.9.10

Today, we’re excited to release PeerLLM Host v0.9.10, one of our most significant updates yet. This version brings accounts, host linking, a redesigned model experience, an OpenAI-compatible endpoint, and major performance and concurrency improvements.

This release marks a major step toward a fully decentralized, contributor-powered AI network.

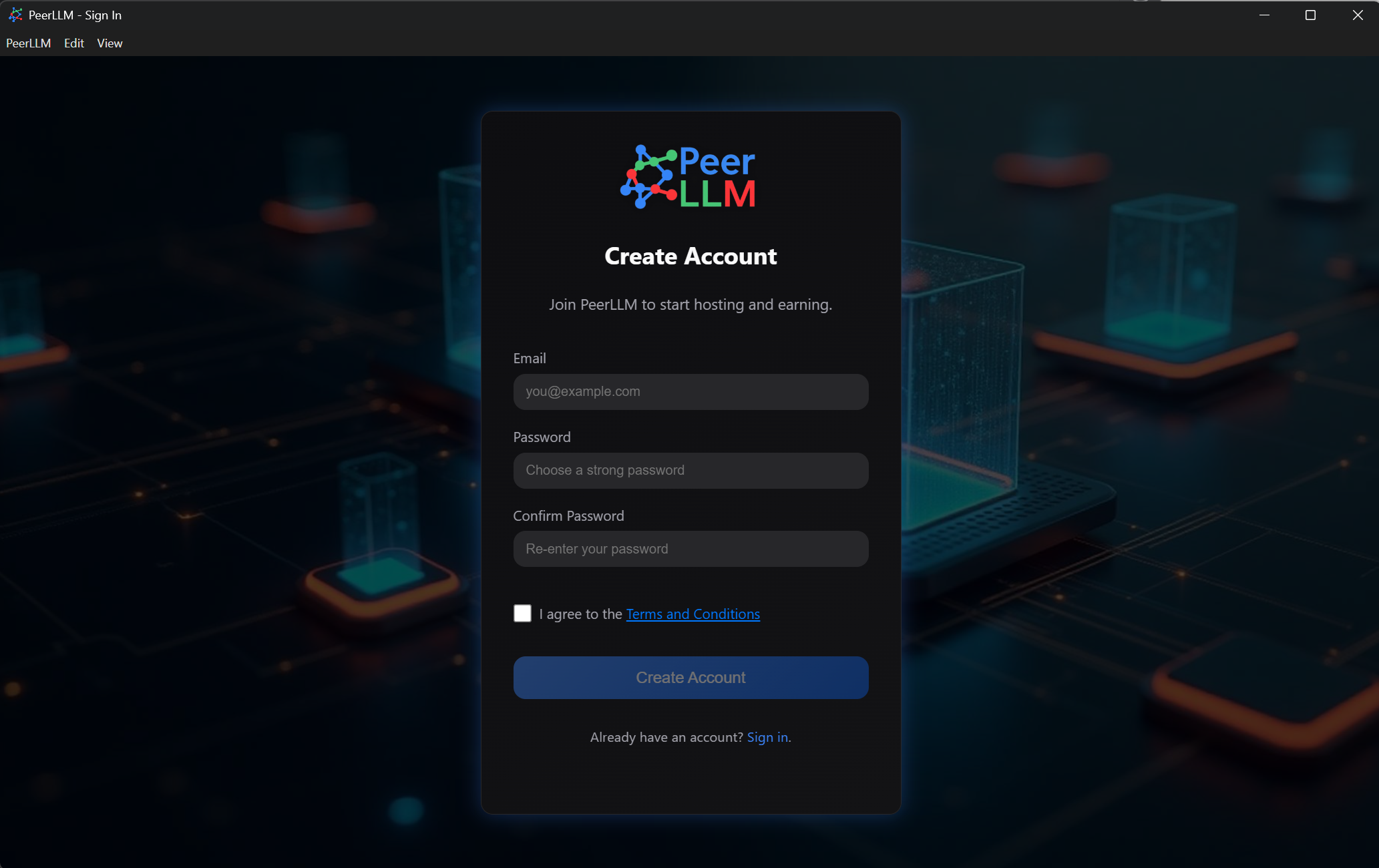

0. Open Enrollment (No More Forms)

PeerLLM is officially open to everyone.

You can now:

- Create an account instantly

- Log in from any device

- Reset your password

- Sync your experience across machines

No waitlist. No approval. No friction.

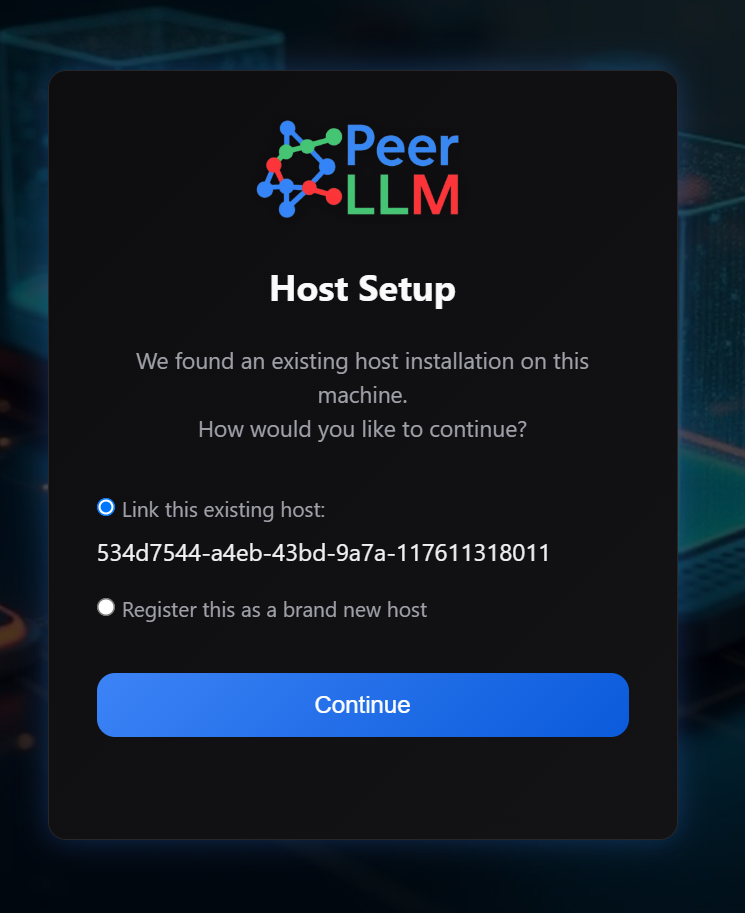

1. Link Your Hosts to Your Account

With accounts now active, you can connect your existing PeerLLM Hosts or create new ones with a single click.

Once linked, you unlock:

- Remote visibility

- Contribution tracking

- Version and activity insights

- Centralized management across devices

2. Monitor All Your Hosts in One Place

Every host you link appears in your dashboard at:

https://hosts.peerllm.com

From the portal, you can:

- View online/offline status

- Track contributions

- Manage model availability

- Sync preferences

- Prepare for future rewards

This is your control center for all things PeerLLM.

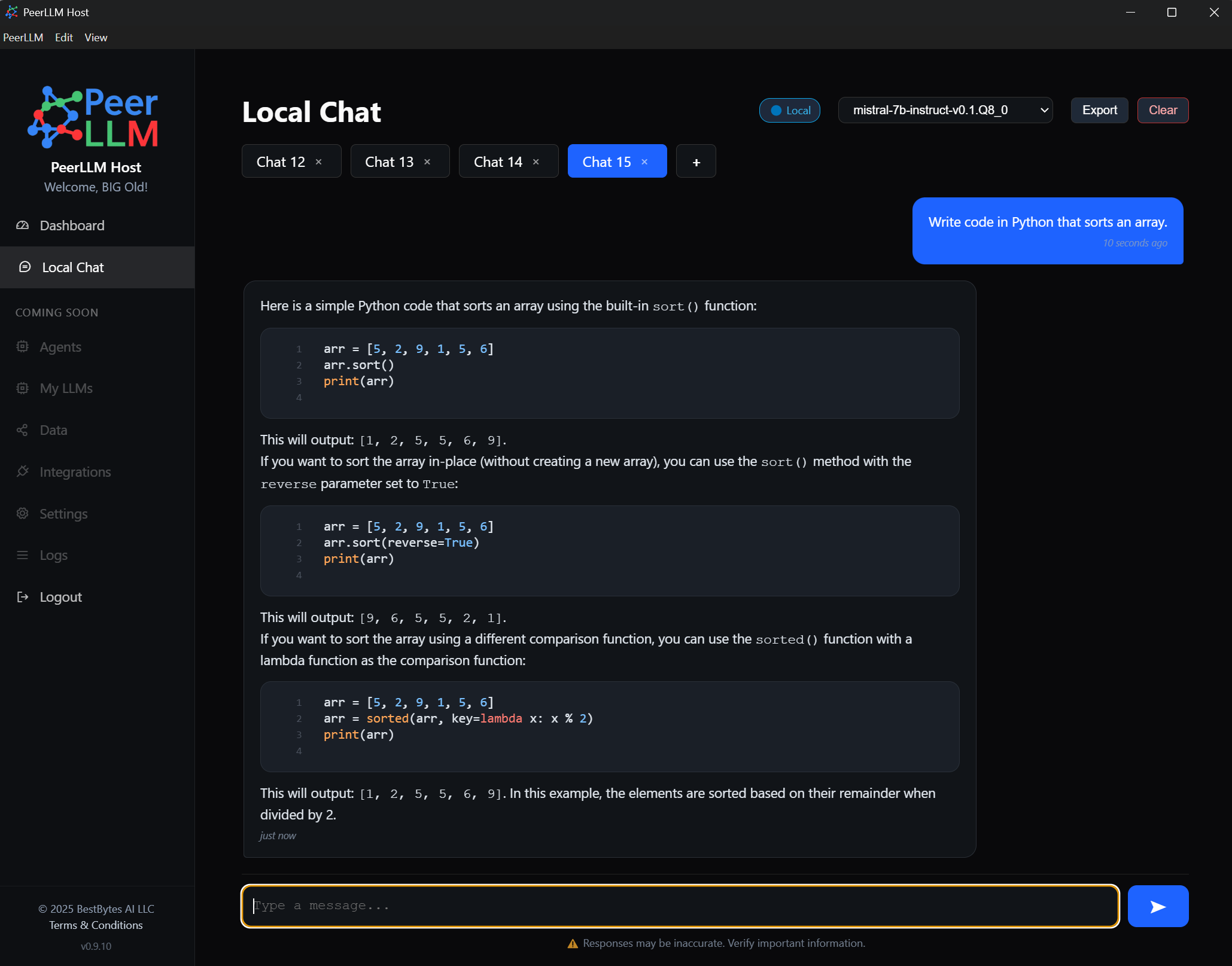

3. Chat With Your Host Locally

PeerLLM Host now lets you chat directly with LLMs on your machine — completely offline.

- Fully private

- No network latency

- Works with any installed model

- Perfect for coding, writing, Q&A, and experiments

4. Download LLMs — Network Approved or Local-Only

The new LLM Explorer lets you browse and download models effortlessly:

- Approved Models → optimized for the PeerLLM network

- Local Models → any LLM you want, anytime

- Filters for size, architecture, quantization, and compatibility

You choose what runs locally and what’s shared with the network.
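To make the filtering idea concrete, here is a minimal Python sketch of a model catalog filtered by size, architecture, quantization, and approval status. The `ModelEntry` fields, the `filter_models` helper, and the example model names are all hypothetical; they illustrate the concept and are not the LLM Explorer's actual data model or code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEntry:
    # Hypothetical catalog record; field names are illustrative, not PeerLLM's schema.
    name: str
    size_gb: float
    architecture: str
    quantization: str
    network_approved: bool


def filter_models(catalog, max_size_gb=None, architecture=None,
                  quantization=None, approved_only=False):
    """Return the catalog entries that match every filter that was supplied."""
    results = []
    for m in catalog:
        if max_size_gb is not None and m.size_gb > max_size_gb:
            continue
        if architecture is not None and m.architecture != architecture:
            continue
        if quantization is not None and m.quantization != quantization:
            continue
        if approved_only and not m.network_approved:
            continue  # skip local-only models when only approved ones are wanted
        results.append(m)
    return results


# Illustrative catalog entries (made-up names and sizes).
catalog = [
    ModelEntry("example-7b-q4", 4.1, "llama", "Q4_K_M", True),
    ModelEntry("example-13b-q8", 13.8, "llama", "Q8_0", False),
    ModelEntry("example-3b-q4", 2.0, "phi", "Q4_K_M", True),
]

small_approved = filter_models(catalog, max_size_gb=5, approved_only=True)
print([m.name for m in small_approved])  # ['example-7b-q4', 'example-3b-q4']
```

Filters that are left unset (`None` or `False`) simply don't constrain the result, so calling `filter_models(catalog)` returns the whole catalog.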

5. OpenAI API–Compatible Endpoint (via Orchestrator)

PeerLLM’s Orchestrator now exposes a fully OpenAI-compatible API endpoint, which means you can connect to it using:

- VS Code AI extensions

- IntelliJ / JetBrains IDEs via Connect plugins

- Third-party tools that support OpenAI APIs

- Custom applications with minimal configuration

If a tool supports the OpenAI API, it now supports PeerLLM.

This unlocks local AI development without vendor lock-in, usage limits, or reliance on the cloud.
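Because the endpoint follows the OpenAI API shape, a client mostly needs a base URL, a model name, and possibly a key. The sketch below builds a standard OpenAI-style `POST /chat/completions` request using only Python's standard library. The Orchestrator base URL, the key, and the model ID are placeholders, and whether the Orchestrator requires a bearer token at all is an assumption; substitute the real values from PeerLLM's documentation.

```python
import json
import urllib.request

# Placeholder values: the real Orchestrator URL, model IDs, and auth scheme
# are assumptions here, not documented PeerLLM settings.
BASE_URL = "https://orchestrator.example.com/v1"  # hypothetical
API_KEY = "YOUR_PEERLLM_KEY"                       # hypothetical
MODEL = "example-model"                            # hypothetical


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completions POST request (not yet sent)."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


req = build_chat_request("Write a haiku about peer-to-peer compute.")
print(req.full_url, req.get_method())
# With a real endpoint configured: print(send(req))
```

Most OpenAI-compatible tools expose the same three settings (base URL, API key, model), which is why pointing an existing extension at the Orchestrator is typically a configuration change rather than new code.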

⚡ 6. Enhanced Model Performance & Concurrency

PeerLLM Host v0.9.10 introduces major LLM performance upgrades:

✔ Shared Memory

Models now load once into memory and serve multiple conversations without reloading.

✔ Isolated KV Cache & Context

Each chat tab runs with its own private KV cache and independent context, so no state leaks between conversations.
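Here is a toy Python model of the "load once, isolate per chat" design: one `SharedModel` object is created per model name and reused, while each `Session` owns its own context and cache. All class names are hypothetical, and the "KV cache" here is just a list standing in for the per-layer key/value tensors a real inference engine keeps; this is an illustration of the idea, not PeerLLM's implementation.

```python
import threading
from dataclasses import dataclass, field


class SharedModel:
    """Stand-in for model weights: loaded once, then shared read-only."""
    load_count = 0  # counts loads to show the weights are not reloaded

    def __init__(self, name: str):
        SharedModel.load_count += 1
        self.name = name

    def next_token(self, context: list[str]) -> str:
        # Placeholder for a forward pass over the session's context.
        return f"tok{len(context)}"


@dataclass
class Session:
    """One chat tab: private context and cache, shared weights."""
    model: SharedModel
    context: list[str] = field(default_factory=list)
    kv_cache: list[tuple[str, str]] = field(default_factory=list)

    def send(self, user_text: str) -> str:
        self.context.append(user_text)
        reply = self.model.next_token(self.context)
        # The cache lives on this session object only, so other tabs never see it.
        self.kv_cache.append((user_text, reply))
        self.context.append(reply)
        return reply


class Host:
    def __init__(self):
        self._models: dict[str, SharedModel] = {}
        self._lock = threading.Lock()

    def open_session(self, model_name: str) -> Session:
        with self._lock:  # prevents two concurrent requests from loading twice
            if model_name not in self._models:
                self._models[model_name] = SharedModel(model_name)
            return Session(self._models[model_name])


host = Host()
a = host.open_session("example-model")
b = host.open_session("example-model")
a.send("hello")
a.send("again")
b.send("hi")
print(SharedModel.load_count)            # 1
print(len(a.kv_cache), len(b.kv_cache))  # 2 1
```

Both sessions point at the same weights object, but their contexts and caches are separate lists, which is the property that keeps one conversation from bleeding into another.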

✔ More Conversations Per Host

With improved memory handling and better orchestration, hosts can now support more concurrent sessions than before.

This makes PeerLLM faster, more stable, and more scalable — especially for multi-user or dev-tool scenarios.
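A rough back-of-the-envelope model shows why sharing weights raises the session count. Let W be the memory for one copy of the model weights, K the KV-cache memory reserved per conversation, M the memory available on the host, and n the number of concurrent conversations. These symbols are our own simplification and ignore runtime overhead:

```latex
\begin{aligned}
M_{\text{reload}}(n) &= n\,(W + K), &
M_{\text{shared}}(n) &= W + n\,K, \\
n_{\max}^{\text{reload}} &= \left\lfloor \frac{M}{W + K} \right\rfloor, &
n_{\max}^{\text{shared}} &= \left\lfloor \frac{M - W}{K} \right\rfloor.
\end{aligned}
```

With illustrative numbers (not measurements) of W = 4 GB, K = 0.5 GB, and M = 16 GB, reloading the model per conversation fits floor(16 / 4.5) = 3 sessions, while sharing the weights fits floor(12 / 0.5) = 24. Actual gains depend on model size, context length, and how the host allocates its cache.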

7. A Cleaner, More Powerful LLM Management Experience

The Host dashboard has been upgraded to give you full control over your models:

- Enable or disable models instantly

- Manage approved vs. local-only models

- View download sizes, metadata, and compatibility

- Control model sharing behavior

- Access quick-launch options

The result is a smoother, clearer, more intuitive experience.

What’s Coming Next

PeerLLM Host v0.9.10 lays the foundation for the next generation of decentralized AI. Here’s what we’re building toward:

0. Payments for Contributors

Hosts will soon earn automated payouts for contributing compute to the network.

1. Business Applications Built on PeerLLM

This opens the door to a new ecosystem of apps, services, and agents built on top of the decentralized PeerLLM compute layer.

2. Decentralized Data — Get Paid for Your Knowledge

We’re working toward a new era in which your knowledge, experience, and contributions can be tokenized and rewarded privately, securely, and under your control.

Download PeerLLM Host v0.9.10

Choose your platform:

Built with passion by the PeerLLM Team