Stress-Testing Decentralized AI in Seattle’s Tech Ecosystem

0/ Why Seattle?

Seattle has one of the most vibrant and intellectually demanding tech ecosystems in the world. It is home to brilliant engineers, seasoned architects, and product leaders who have built systems used by billions of people every single day.

At these events, we met people from big tech, startups, and even the non-profit sector. Conversations were thoughtful, direct, and deeply technical. As experienced engineers tend to be, the reaction pattern was remarkably consistent. It began with skepticism. Then came curiosity. Then realization. And finally, excitement.

Seattle was the perfect place to stress-test the idea of decentralized AI. If the idea can withstand scrutiny here — among people who understand distributed systems, infrastructure trade-offs, and scale — then it can withstand scrutiny anywhere.

1/ First-Impressions

I worked intentionally to make sure my pitch takes no more than ten seconds. In rooms filled with engineers, clarity matters more than length.

The simplest way I describe PeerLLM is this:

“It’s Airbnb for your computer.”

That usually earns a smile and an immediate mental model. Once that lands, I refine it in closer conversations:

“Do you use your computer 100% of the time? Probably not. What if the unused portion of that compute could be shared on a distributed AI network — and potentially generate passive income?”

At that point, the idea clicks. People immediately visualize idle CPUs and GPUs being transformed into productive infrastructure. And once that visualization forms, the real questions begin.

2/ Questions

Those who leaned in — especially technical professionals — asked thoughtful and remarkably consistent questions. The skepticism was not dismissive; it was analytical. That made the conversations meaningful.

2.0/ Does It Actually Work?

PeerLLM is a decentralized AI compute network built around three essential personas: producers, hosts, and consumers.

Producers are developers who create high-quality LLMs. Hosts are individuals or organizations who contribute compute power by running those models on their machines. Consumers are developers or companies who use AI through our API.

I often describe it using a simple analogy: a farmer, a store owner, and a customer. The farmer grows the food. The store owner makes it available. The customer benefits from it. Each plays a distinct role, and the ecosystem functions only when all three participate.

Developers build models. Those models can be downloaded onto host machines and made available locally, within private networks, or globally through the PeerLLM network.

The generosity of the open-source AI community is what makes this possible. PeerLLM exists because open AI ecosystems exist. Our goal is to help close the economic loop — to allow people contributing compute or models to participate in the value being created.

That is the foundation of a real AI economy.

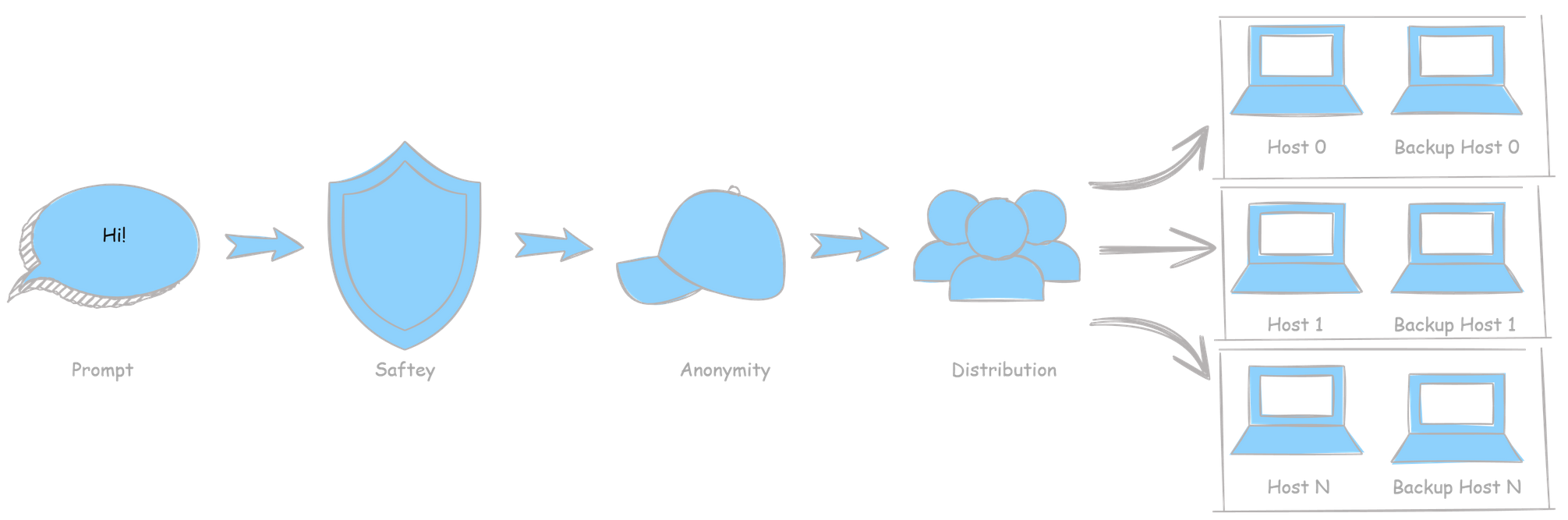

2.1/ What About Security?

Security is often the first serious technical concern — and rightly so.

In fast-paced event settings, the architecture diagram communicates the model more effectively than a long explanation.

At a high level, a user’s prompt does not travel directly to a host machine. Instead, it passes through multiple architectural layers, including security checks, anonymization, distribution logic, and orchestration.

Each host receives only a small, context-limited task. Individually, that task carries no meaningful or sensitive information. Only at the orchestration layer does the full response take shape.

This design enables decentralized inference while reducing risk exposure at any single node.

Incoming prompts are validated for safety. Outgoing responses are reviewed. Harmful content is blocked in alignment with PeerLLM’s usage policies. Anonymization layers strip away personal identifiers before distribution.

Security is not a feature added later. It is embedded in the architecture itself.

2.2/ How Would the Money Work?

The economic model is intentionally simple and transparent.

PeerLLM charges $1 for every 100,000 tokens processed. Of that dollar, $0.95 goes directly to the host providing compute. Hosts pay a $5 monthly subscription fee to participate in the network.

In practical terms, a host can break even with approximately one 500,000-token session.

Interestingly, the calculator drew the most attention during conversations. It demonstrated preparedness. It showed that this was not just a philosophical idea — but a mathematically considered system.

PeerLLM Earnings Calculator

Adjust the sliders to explore your potential monthly earnings as a PeerLLM Host.

Subscription cost: $5/month per Host (fixed)

Estimated Monthly Earnings:

$0

Disclaimer:

This calculator is provided for informational and educational purposes only. The values shown are

approximations based on theoretical calculations. Actual earnings may vary significantly depending on

uptime, hardware performance, consumer demand, and network conditions.

PeerLLM is currently in experimental and pre-release stages and does not generate revenue at this time.

No earnings, payouts, or performance results are guaranteed until after the official production release of the PeerLLM

Orchestration Network.

By using this calculator, you acknowledge that it does not constitute a financial promise, offer, or guarantee of income,

and all results should be treated as illustrative estimates only.

The model is designed so that hosts earn more than the platform. This is not about maximizing margins. It is about building participation and creating incentives that align with decentralization.

The goal is not extraction. The goal is distribution.

2.3/ What Stage Are You In?

PeerLLM is already fully functional end-to-end. The network operates, prompts flow, and distributed inference works.

The next iteration focuses on payment infrastructure. We are integrating PayPal to manage host subscriptions, host payouts, and consumer billing plans. These components are essential as we prepare for a general availability release of v1.0.0.

Our target launch date is March 1st.

2.4/ What Do You Need Today To Keep Going?

This was the most common and encouraging question I received.

At every event, we are looking for three types of people: hosts, developers, and integrators.

Hosts are individuals willing to download the application, sign up, and run LLMs on their local machines. They form the distributed backbone of the network.

Developers are model creators who want distribution and monetization opportunities. We aim to build an AI economy where models can be sold, subscribed to, and deployed across decentralized infrastructure.

Integrators and consumers are companies and developers who simply need AI for daily operations. PeerLLM supports the OpenAI API standard, making integration straightforward across existing applications and SDKs.

Developers can build on decentralized AI infrastructure without changing their workflow.

3/ Real Feedback from the Community

One of the most meaningful outcomes of this tour was hearing thoughtful public feedback from engineers who engaged deeply with the idea.

The reflections were not blind optimism. They were analytical and balanced. They highlighted the opportunity — tapping into unused global compute, creating alternative economic participation models, and reducing total reliance on centralized hyperscalers.

They also raised hard questions about long-term incentives, latency, performance at scale, and economic sustainability in an AI-dominated world.

Those are not trivial concerns. They are foundational questions.

And that is precisely why these conversations matter.

Decentralization is not interesting because it sounds idealistic.

It is interesting because serious engineers are beginning to ask whether alternative infrastructure models are not just possible — but necessary.

4/ The Bigger Shift

One interesting pattern from these events was realizing that PeerLLM is not alone in exploring decentralized AI infrastructure.

For example, companies like ReEnvision AI are thinking about decentralized compute from a business perspective — helping organizations rethink how AI workloads can be distributed rather than fully centralized.

The approaches may differ. The target audiences may differ. But the underlying signal is the same:

People are beginning to question whether hyperscaler-only infrastructure is the inevitable future of AI.

When independent builders start converging toward similar architectural ideas, it usually means something structural is happening.

Decentralization is not just an ideology.

It is becoming a design option.

Final Thought

What fascinated me most during this tour was not just the depth of the technical discussion. It was the consistency of the reaction.

PeerLLM, as an idea, was immediately understood. People grasped the value within seconds. The concept of unused compute becoming productive infrastructure did not require persuasion. It clicked.

Very few people walked away. Almost everyone stayed. And almost everyone had serious questions.

Questions about incentives.

Questions about latency.

Questions about sustainability.

Questions about economic structure in an AI-driven future.

No one dismissed the idea outright.

That matters.

In a future where intelligence becomes infrastructure, infrastructure becomes power. And power — if left concentrated — shapes opportunity, access, and economic participation.

But infrastructure can also be distributed.

It can be owned locally.

It can be shared.

It can be participatory.

PeerLLM is not just a product. It is an experiment in economic alignment. It is an exploration of whether the AI economy can be participatory rather than purely centralized.

Movements do not begin with perfection. They begin with possibility.

And in room after room, across conversations that began with skepticism and ended with engagement, one thing became clear:

Decentralized AI is not fringe.

It is timely.

We are building an alternative.

One host.

One developer.

One integrator.

One conversation at a time.

A Personal Note

Before closing, I want to acknowledge someone who made this entire tour possible.

My co-founder and partner, Kailu, drove to every single event, helped discover and organize them, captured the photos, and supported every conversation along the way. More importantly, he believes deeply in the mission and long-term vision behind PeerLLM.

Building something ambitious requires more than technical architecture. It requires conviction. It requires someone willing to show up consistently — even when the outcome is uncertain.

I’m grateful to be building this alongside someone who shares that belief.